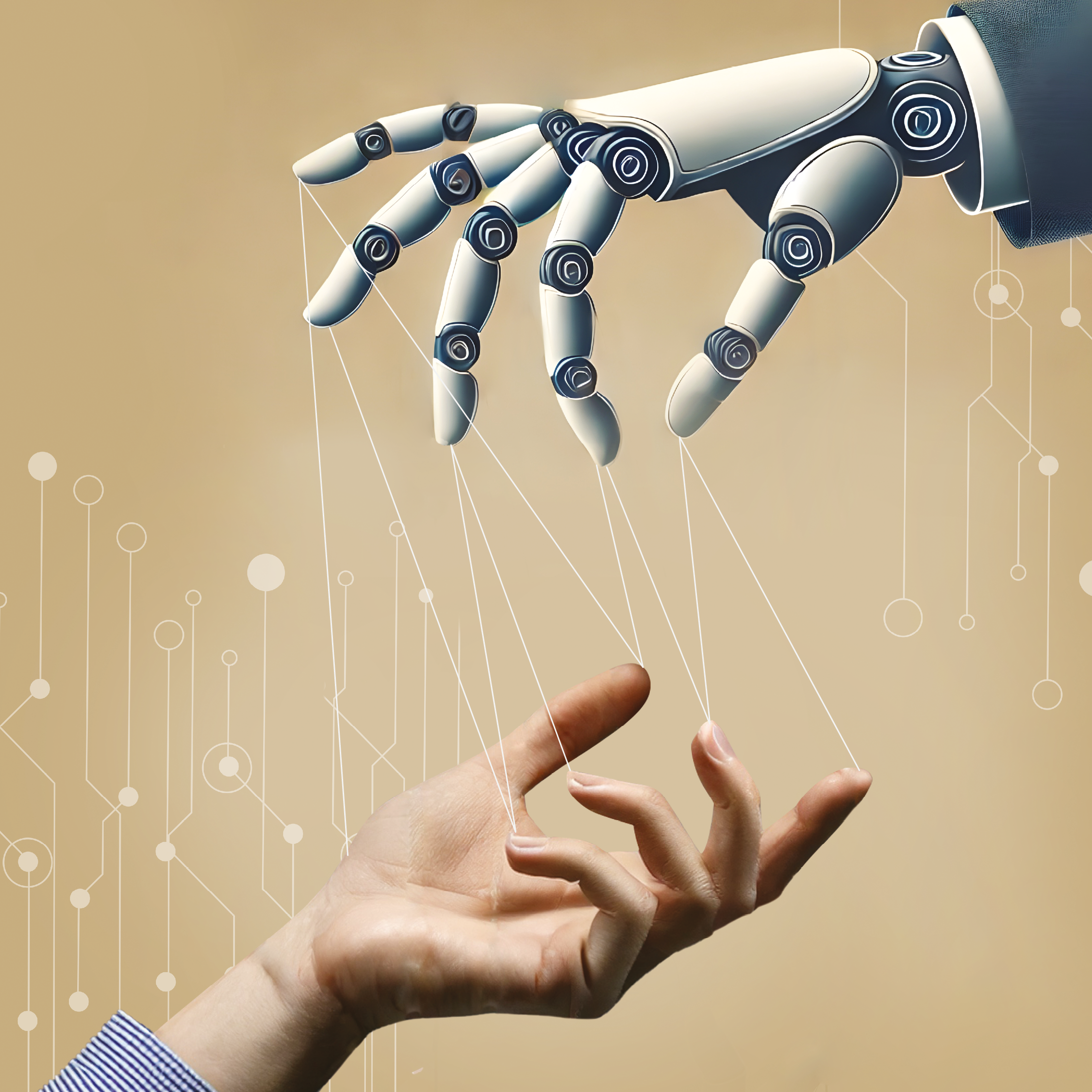

Apart from well-known challenges such as the risk of discrimination or the potential exposure of dangerous information, researchers from Oxford¹ have pointed out two other key threats: excessive reliance on AI models and the models' ability to persuade. These risks are particularly high because experiments show that people's trust in models exceeds the models' actual accuracy. The matter is further complicated by the fact that models, even when they lack real knowledge, speak like experts: they explain complex issues in detail and use sophisticated vocabulary. As a result, people trust them more than they trust human experts.

Researchers from the Massachusetts Institute of Technology (MIT) demonstrated this in an experiment in which participants recognized emotions and assessed their intensity. At one stage, participants could revise their decision based on a suggestion that came either from artificial intelligence or from another person. The researchers told participants where each suggestion came from, but this label did not always match the true source. Participants changed their minds more often when they believed a suggestion came from AI, even if a human was actually behind it. Conversely, participants changed their minds equally rarely whether the advice genuinely came from a person or came from AI but was presented as coming from a person.