For years, Andrew Ng’s metaphor “AI is the new electricity” has aptly described the ubiquitous yet often invisible force that drives technological change. Andrej Karpathy’s argument is significantly more radical: artificial intelligence is no longer merely a utility, like electricity from an outlet, but something fundamentally different: a new kind of universal computer. It is a platform on which we will build, not just a force that powers existing systems. We are the first generation learning to program through the most natural interface of all: the English language.

The barrier to entry, defined for decades by knowledge of complex syntax, is now falling. Karpathy speaks plainly and invites us into a new reality: welcome to the era of Software 3.0.

Who is Andrej Karpathy?

When Karpathy speaks, it is worth listening. His path led from research on convolutional and recurrent networks at Stanford University, where he co-authored significant work in computer vision and on connecting images with language, through membership of the founding research team at OpenAI during its original non-profit phase, to a key position as head of AI at Tesla.

It was there, during the five years he spent leading the team that developed Autopilot, that he learned how vast the gap can be between an impressive demo and a finished product ready for the mass market. He understood that the real challenge is not building a model that works in 95% of cases, but handling the practically endless number of unusual situations that determine safety. At the beginning of 2023, Karpathy rejoined OpenAI, but his second stint there was brief: at the beginning of 2024 he left again to focus on his own independent projects.

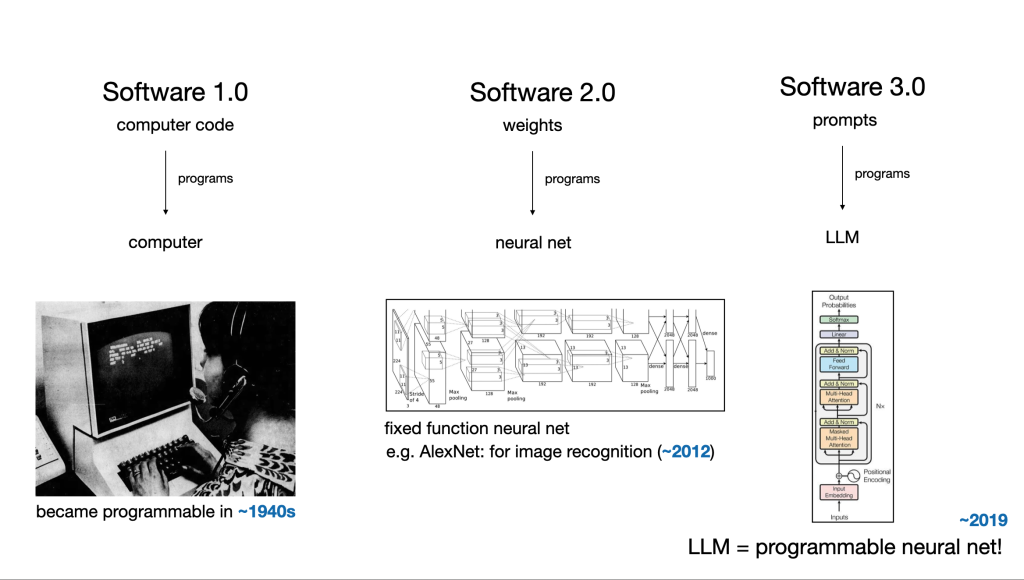

Three software eras

To fully understand the scale of the current transformation, Karpathy suggests looking at the history of technology in the context of software development. Each era represents a fundamentally different paradigm of creating and thinking about code.

Software 1.0 was the classical world, governed by clear, deterministic logic. The programmer was a craftsman who, using languages like C++ or Java, built complex mechanisms line by line from loops, conditional statements and data structures. Every element of the program was a direct reflection of the programmer’s thinking. The systems were precise and predictable, but also fragile and unable to handle the ambiguity of real-world tasks such as recognizing faces or understanding speech.

Software 2.0 is the fruit of the machine learning revolution. Instead of writing rigid rules, we started showing examples to the machine. To a great extent, we replaced code with data. From craftsmen, we became teachers and curators of data, whose main task was to collect, clean and label huge training sets. This is how systems capable of recognizing objects in photos, filtering spam or driving a car were developed. We created powerful, but often impenetrable “black boxes”.

Software 3.0 introduces even deeper changes than the previous revolution. We program in natural language, in dialogue with a model. Instead of writing code (as in 1.0) or curating data (as in 2.0), we describe the problem and the outcome we expect. The code is no longer written by hand but synthesized on the fly by a powerful pre-trained model. The role of humans is changing again: we are becoming architects, editors and AI psychologists who must formulate instructions precisely and assess the results critically. This drastically lowers the barrier to entry, but it also raises new fundamental questions. It is no longer just about whether the code works, but about how far we can trust something that was created in response to a few casual remarks. How do we ensure its reliability, safety and predictability in critical applications?
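The three eras can be sketched on a single toy task, sentiment classification. This is only an illustration under stated assumptions: the word lists, the function names and the tiny word-count "model" are invented for this example, and the Software 3.0 part only builds a prompt rather than calling any real model API.

```python
from collections import Counter

# Software 1.0: a hand-written rule. Every decision is explicit in the code.
POSITIVE_WORDS = {"great", "love", "excellent"}   # illustrative word lists
NEGATIVE_WORDS = {"bad", "hate", "terrible"}

def classify_v1(text: str) -> str:
    words = text.lower().split()
    score = sum(w in POSITIVE_WORDS for w in words) - sum(w in NEGATIVE_WORDS for w in words)
    return "positive" if score >= 0 else "negative"

# Software 2.0: behaviour is learned from labelled examples instead of rules.
# A per-word counter stands in for a real trained model.
def train_v2(examples: list[tuple[str, str]]) -> Counter:
    weights: Counter = Counter()
    for text, label in examples:
        delta = 1 if label == "positive" else -1
        for word in text.lower().split():
            weights[word] += delta
    return weights

def classify_v2(weights: Counter, text: str) -> str:
    score = sum(weights[w] for w in text.lower().split())
    return "positive" if score >= 0 else "negative"

# Software 3.0: the "program" is a natural-language prompt.
# Sending it to a model is deliberately left out of this sketch.
def build_prompt_v3(text: str) -> str:
    return (
        "Classify the sentiment of the review below as 'positive' or 'negative'. "
        "Answer with a single word.\n\n"
        f"Review: {text}"
    )
```

In the first version the logic lives in the code, in the second it lives in the training data, and in the third it lives in the wording of the prompt, which is why the prompt becomes the thing that has to be reviewed and tested.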

LLM as the new mainframe computer

To help us understand this change, Karpathy suggests an intriguing analogy: today a large language model (LLM) functions like an entire computer. The model itself, for instance GPT-4, is the equivalent of a processor (CPU), the central unit that executes all commands. The context window serves as RAM, holding the instructions and data needed for the current operation. Tools and plugins, such as internet access, a calculator or a code interpreter, act like peripherals and drivers that let the processor communicate with the outside world. In this metaphor, applications like Cursor or Perplexity become portable programs that can run on different “operating systems” (AI models).
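The "context window as RAM" part of the analogy can be made concrete with a toy model. The sketch below is an assumption-laden simplification: the `ContextWindow` class is hypothetical, tokens are approximated as whitespace-separated words, and the oldest messages are simply dropped when the budget is exceeded, which is only one of several possible strategies.

```python
from collections import deque

class ContextWindow:
    """Toy model of a context window: a fixed token budget, oldest messages evicted first."""

    def __init__(self, max_tokens: int) -> None:
        self.max_tokens = max_tokens
        self.messages: deque[str] = deque()

    @staticmethod
    def count_tokens(message: str) -> int:
        # Assumption: one whitespace-separated word counts as one token.
        # Real tokenizers split text differently.
        return len(message.split())

    def used(self) -> int:
        return sum(self.count_tokens(m) for m in self.messages)

    def add(self, message: str) -> None:
        self.messages.append(message)
        # Like RAM, the window is finite: drop the oldest messages until
        # the budget is respected, always keeping the newest one.
        while self.used() > self.max_tokens and len(self.messages) > 1:
            self.messages.popleft()

window = ContextWindow(max_tokens=5)
for message in ["a b c", "d e", "f g"]:
    window.add(message)
```

After the third call the first message no longer fits and is gone: whatever falls out of the window is simply not available to the "processor" any more.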

In Karpathy’s opinion, the current situation resembles the 1960s and the era of mainframe computers: powerful, centralized machines that users connected to via simple terminals and shared computing time among themselves. Today, these terminals are chat windows, and we share computing resources from OpenAI, Google or Anthropic.

Model psychology

One of the biggest challenges is the deeply unusual “psychology” of these new computers. Karpathy describes it as a fascinating and paradoxical combination of two film characters. On one hand, LLMs are like Raymond from “Rain Man”: brilliant savants with a photographic, near-flawless short-term memory. Within an active context window, they can instantly process and correlate thousands of pages of text and solve problems in ways that are out of reach for the human mind. They rarely lose a detail that was provided to them. This genius, however, is fleeting and limited to the present moment.

On the other hand, they resemble Leonard from “Memento”: they suffer from a severe form of amnesia. When the context disappears, the memory disappears with it. They lack a permanent, stable understanding of the world and are highly susceptible to suggestion. And since they are essentially prediction machines rather than reasoning machines, they can be easily deceived. A single subtly misleading hint can be enough for them to start hallucinating and to generate, with full confidence, convincing yet completely fabricated information. These are not even lies, but statistical echoes that sound correct and credible, which makes them particularly insidious.

Therefore, the only effective strategy for working with them is a simple rule: generate, then verify. The human must remain the pilot, leveraging the machine’s remarkable speed while supplying critical thinking and the final judgment.
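The "generate, then verify" rule can be sketched as a small loop. Everything here is illustrative: `make_stub_model` is a hypothetical stand-in that replays canned answers instead of calling a real model, and `verify_add` is just one possible independent check (running the candidate against a known test case).

```python
from typing import Callable, Optional

def generate_then_verify(
    generate: Callable[[str], str],
    verify: Callable[[str], bool],
    prompt: str,
    max_attempts: int = 3,
) -> Optional[str]:
    """Return the first candidate that passes verification, or None."""
    for _ in range(max_attempts):
        candidate = generate(prompt)
        if verify(candidate):
            return candidate
    return None  # nothing passed: escalate to a human instead of trusting the output

def make_stub_model(outputs: list[str]) -> Callable[[str], str]:
    """Hypothetical stand-in for a model call: replays canned outputs in order."""
    remaining = iter(outputs)
    return lambda prompt: next(remaining)

def verify_add(source: str) -> bool:
    """Independent check: execute the candidate and test its behaviour."""
    namespace: dict = {}
    try:
        # Acceptable here only because the inputs are canned strings;
        # real generated code should run in a sandbox.
        exec(source, namespace)
        return namespace["add"](2, 3) == 5
    except Exception:
        return False

model = make_stub_model([
    "def add(a, b): return a - b",   # plausible-looking but wrong
    "def add(a, b): return a + b",   # correct
])
result = generate_then_verify(model, verify_add, "Write add(a, b)")
```

The first candidate looks reasonable and would pass a casual glance; only the explicit check rejects it, which is the whole point of keeping verification separate from generation.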

Autonomy slider and vibe coding

Instead of striving for complete, often fragile AI autonomy, Karpathy promotes a much more pragmatic idea: the “autonomy slider”. The goal is to build systems that work like the suit from the movie “Iron Man”, an exoskeleton that enhances human abilities rather than replacing the human. In this model, AI becomes a powerful partner: it generates code, drafts texts, analyzes data sets or creates graphic designs. But people remain at the center when decisions are made. With one click, we accept, modify or reject the proposal, and we keep full control over the final outcome. This approach builds trust and allows users to delegate more tasks gradually.
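One way to picture the autonomy slider is as an explicit setting that decides when a human must approve a proposal. The levels, names and thresholds below are assumptions made up for this sketch, not a description of any particular product.

```python
from dataclasses import dataclass
from enum import IntEnum

class Autonomy(IntEnum):
    """Hypothetical slider positions, from least to most autonomous."""
    SUGGEST = 0     # AI only proposes; a human applies every change
    EDIT_FILE = 1   # AI may change a single file without asking
    EDIT_REPO = 2   # AI may change any number of files without asking

@dataclass
class Proposal:
    description: str
    files_touched: int

def needs_human_approval(level: Autonomy, proposal: Proposal) -> bool:
    """Decide whether a proposal must be accepted by a human before it is applied."""
    if level == Autonomy.SUGGEST:
        return True
    if level == Autonomy.EDIT_FILE:
        return proposal.files_touched > 1
    return False  # EDIT_REPO: applied automatically in this sketch

rename = Proposal("Rename a helper function", files_touched=1)
refactor = Proposal("Split the module into packages", files_touched=12)
```

Moving the slider up changes only which proposals skip the approval step; the accept, modify or reject decision stays available at every level below the maximum.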

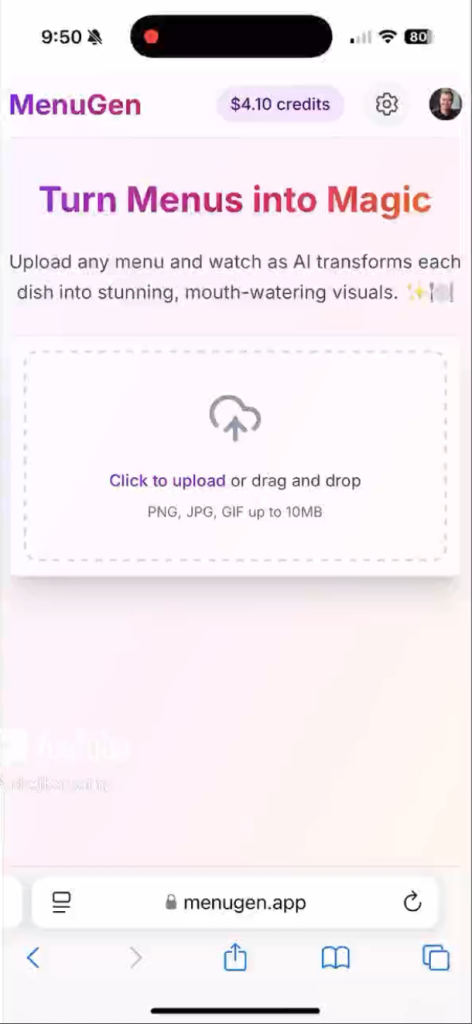

Karpathy conducted an experiment he called “Vibe Coding”. Over a single weekend, he decided to create a fully functional mobile app, even though he was not familiar with the Swift language. How did he do it? Instead of spending weeks learning syntax and reviewing documentation, he simply “talked” to the LLM as if it were an experienced fellow programmer. “Generate the main view with three buttons”, “This doesn’t work, try a different way”, “How do I center this element?”

Karpathy did not manually write a single line of code for the “MenuGen” application. The experiment suggested that knowing a programming language is no longer the key skill; what matters is the ability to describe a problem accurately and to assess the proposed solution critically.

However, the experiment also exposed a problem, which appeared when he tried to integrate the application with payment systems, authentication and server configuration. The model, which excelled at generating isolated code, struggled with the complex and often poorly documented infrastructure surrounding it.

A lesson from Tesla: the biggest gap is in infrastructure

The first prototype of Autopilot, which could travel several kilometers under ideal conditions, was developed relatively quickly and made a huge impression. However, the real work involved hardening the system against a chaotic reality. The focus was on handling the endless “long tail” of unusual cases: blinding sun at odd angles, partially worn road signs, and atypical behavior of pedestrians or cyclists. Today, the entire AI industry is grappling with this issue. Creating impressive demos that can write a poem or a piece of code under controlled conditions is simple. The real challenge that blocks mass adoption is the infrastructure gap. Karolina Ceroń wrote about this in the article “Are autonomous vehicles ready to take over the roads?”.

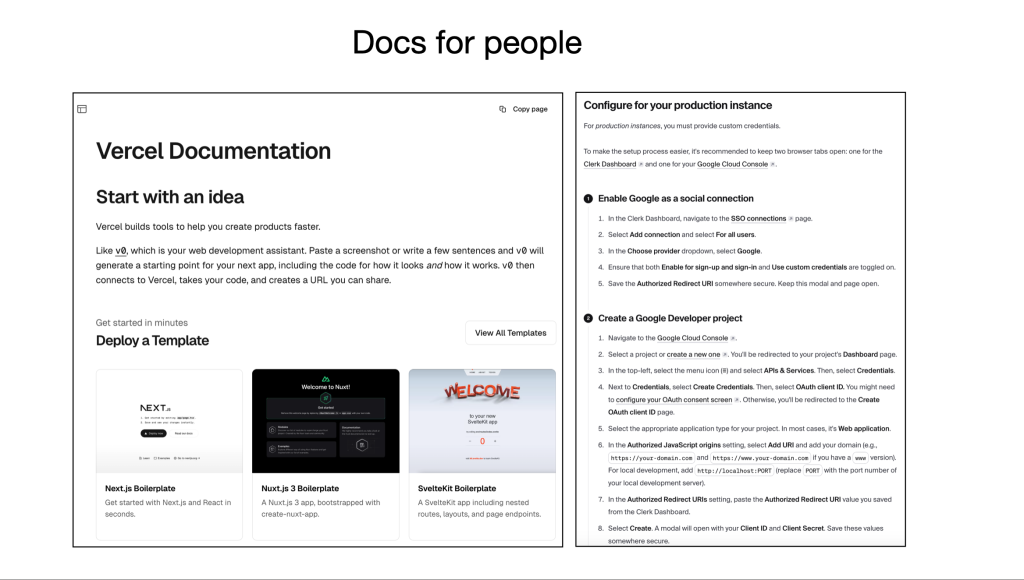

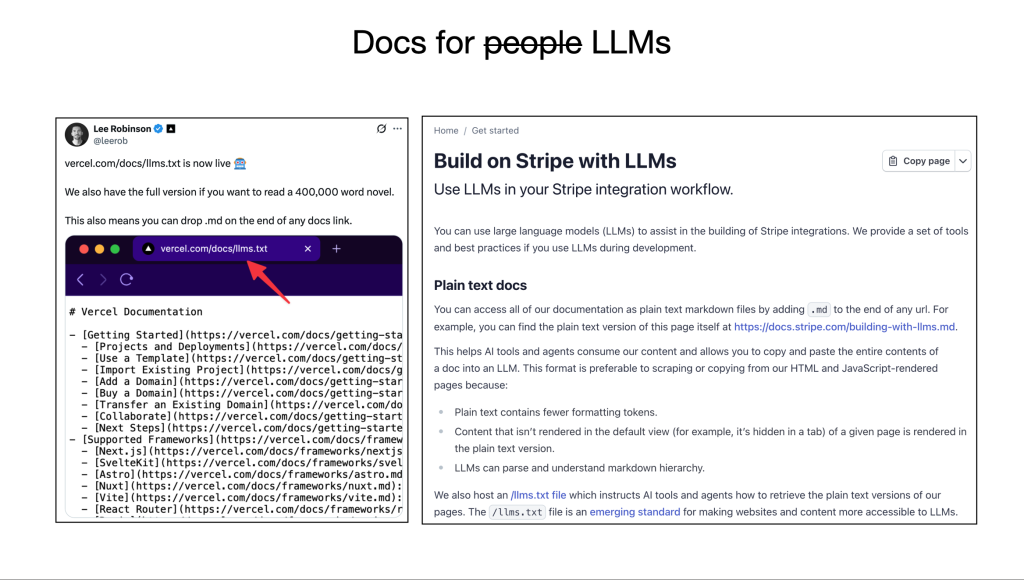

Models can generate code, but they struggle to operate in a digital world that was designed for humans. They stumble over unclear API documentation full of ambiguities and examples that only insiders understand. They cannot simply “click the blue button”, because graphical interfaces rely on visual cues rather than semantic structure. They cannot configure cloud services on their own, because that requires navigating complex authentication, permissions and multi-step configuration.

Therefore, as Karpathy predicts, the coming years will be dominated by a quiet but crucial trend: building AI-friendly infrastructure. It is not even about new models, but about rebuilding the foundations. It is about writing documentation not just for people, but primarily for machines, in simple, structured Markdown or as configuration files with clear instructions. APIs need to become more descriptive, and user interfaces should be enriched with metadata that explains their function to AI agents. The companies that adapt their infrastructure for collaboration with autonomous agents first will gain a massive advantage. They will enable a new wave of automation to operate on their platforms, opening up a completely new market and exponentially accelerating their own
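As a rough illustration of documentation written "primarily for machines", the sketch below renders a structured endpoint description as plain Markdown that an agent could read. The `Endpoint` type, its fields and the example path are all hypothetical; no existing documentation standard is implied.

```python
from dataclasses import dataclass, field

@dataclass
class Endpoint:
    """Hypothetical structured description of one API endpoint."""
    method: str
    path: str
    summary: str
    params: dict[str, str] = field(default_factory=dict)  # parameter name -> description

def to_markdown(endpoint: Endpoint) -> str:
    """Render the endpoint as simple, unambiguous Markdown."""
    lines = [f"## {endpoint.method} {endpoint.path}", "", endpoint.summary]
    if endpoint.params:
        lines += ["", "Parameters:"]
        lines += [f"- `{name}`: {desc}" for name, desc in endpoint.params.items()]
    return "\n".join(lines)

# Illustrative endpoint, invented for this example.
create_order = Endpoint(
    method="POST",
    path="/v1/orders",
    summary="Create an order.",
    params={"item_id": "ID of the item.", "quantity": "Number of units, at least 1."},
)
doc = to_markdown(create_order)
```

Keeping the description as data and generating the text from it means the same source can feed both human-facing pages and agent-facing files without the two drifting apart.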

We are in the year 1960. What’s next?

To sum up: Karpathy positions us at a specific point on the timeline. In terms of operating system development, we are in the 1960s. What seems revolutionary today, monolithic models that we communicate with through a chat window, will look primitive in a decade. Just as no one in the 1960s could have predicted the emergence of smartphones, the internet or social media, we too are unable to fully grasp where the Software 3.0 era will take us. However, we can expect its impact to be of at least similar magnitude.

The greatest opportunities lie in creating entirely new classes of applications that radically democratize access to technology, from personal tutors that adapt to each child’s learning style to scientific assistants capable of formulating and testing hypotheses based on massive datasets. At the same time, we need to be aware of the fundamental risks. High computational costs lead to dangerous centralization, which risks the emergence of digital monopolies on intelligence. The vulnerability of models to errors and manipulation creates a risk of disinformation generated on a massive scale, which in turn can undermine social trust. Finally, the profound impact of automation on the job market requires us to fundamentally rethink education systems and social safety nets.

Karpathy’s message is a clear call to action. The enthusiasm is justified, but the real work now consists of building the essential, albeit “boring”, infrastructure that will allow AI to move from being a spectacular novelty to a daily, reliable utility. AI no longer plays the role of electricity, but of a computer. And it is on this computer that we will soon be building the future.