OpenAI’s CEO, Sam Altman, announced twelve days of livestreams with presentations and demos, featuring, as he put it, “some big ones and some stocking stuffers”. The community was quick to respond (“Samta Claus is coming to town? 🎅🎅🎅”), and the internet erupted with speculation about what lies in OpenAI’s technological sack.

1/12 – o1 and ChatGPT Pro (5 December 2024)

On the first day of the holiday marathon, OpenAI did not disappoint, presenting the full o1 model alongside a new ChatGPT Pro subscription plan. Compared with the preview version, o1 is a significant step up, particularly in processing speed and in its ability to solve complex mathematical and programming tasks.

ChatGPT Pro – A New Offer for Demanding Users

The biggest surprise is the new ChatGPT Pro plan, priced at $200 per month. What do subscribers receive? First and foremost, unlimited access to OpenAI’s latest models, including:

- o1

- o1-mini

- GPT-4o

- Advanced Voice

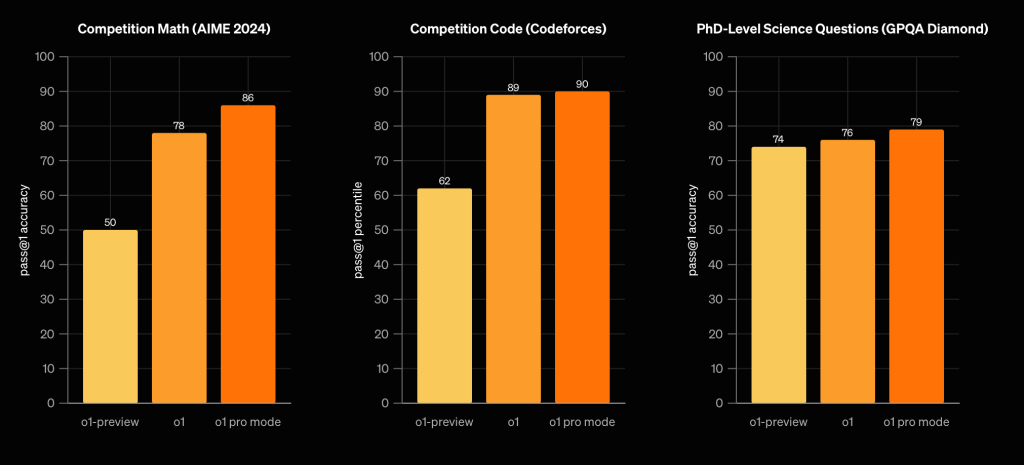

A key feature is o1 “pro mode”, which uses more computing power so that the model can “think” longer about its response. The results are impressive: in comparative tests, pro mode scores higher than the standard o1:

- AIME 2024 mathematics competition: 86% accuracy (8 percentage points higher than standard o1)

- Competitive programming (Codeforces): 90th percentile

- PhD-level science questions (GPQA Diamond): 79% correct answers

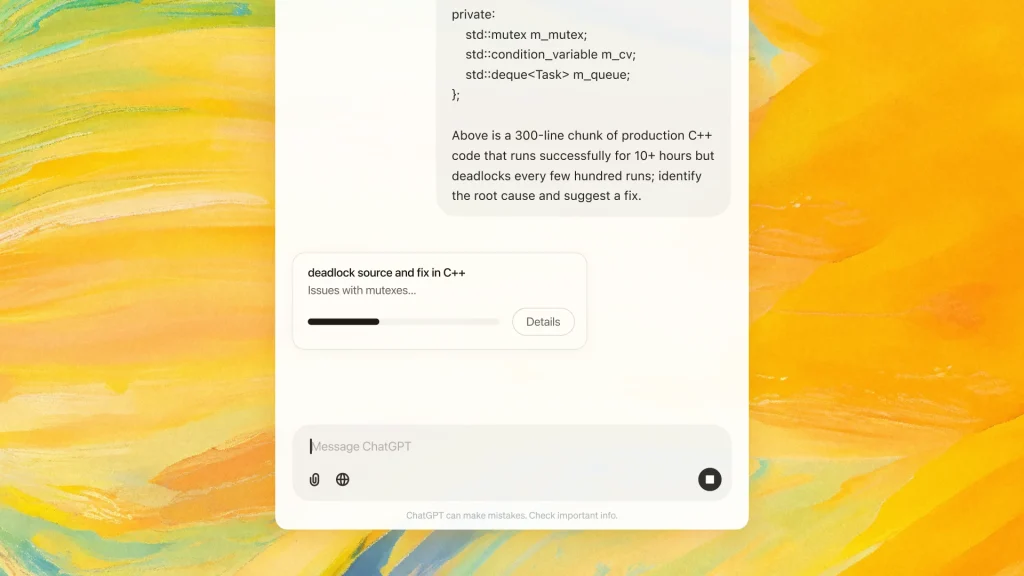

Because pro mode spends more time “thinking”, it takes longer to generate responses. To make the wait easier, Pro users will see a progress bar tracking the model’s “thinking” and will receive a notification as soon as the complete response is ready.

Support for Science

OpenAI hasn’t forgotten the scientific community. The company has announced a grant programme through which 10 medical researchers from leading American institutions will receive free access to the Pro plan. Among the beneficiaries are specialists researching rare diseases and dementia.

2/12 – o1 RL Fine-Tuning (6 December 2024)

Sam Altman kept up the festive excitement, describing today’s announcement as one of his biggest surprises of 2024. Although he did not appear on the stream, he wrote on X (formerly Twitter) that he can’t wait to see what users will create. So what is it all about?

Reinforcement Learning (RL) with o1 series models

During today’s stream, OpenAI representatives announced that developers will be able to fine-tune o1 series models themselves using reinforcement learning (RL), a method OpenAI had until now used only internally. Previously, users could fine-tune models only with supervised learning.

Fine-tuning a smaller model, such as o1-mini, for a specific task can make it outperform a larger model like o1 on that task. This makes it possible to train expert AI models from relatively little training data, which means not only greater efficiency but also lower costs.

An alpha version is being made available now to selected researchers, with broader access planned for the first quarter of 2025.

3/12 – Sora (9 December 2024)

On day three, OpenAI unveiled Sora, an improved AI model that generates realistic and creative videos from text descriptions. Sora can create videos up to 20 seconds long while maintaining high visual quality and consistency with the user’s description. Beyond producing animations, it can also simulate complex real-world phenomena such as character movement, environmental changes, and interactions between objects.

Refreshed Sora now available. How does the tool work in practice?

Alongside the debut of the new Sora Turbo model, OpenAI has prepared a more refined interface with far more extensive options for generating videos. According to Sam Altman and his colleagues, the tool is available to all paying Plus and Pro users (except for users in the European Union and the United Kingdom). On the cheaper subscription, users can generate up to 50 videos per month at 480p resolution, or fewer at 720p. The more expensive Pro plan offers ten times the usage, along with higher 1080p resolution and longer videos.

The company has also announced that it is working on pricing tailored to different types of users, so that everyone outside the EU and UK, where Sora is not yet available, can use it at an affordable price.

4/12 – Canvas (10 December 2024)

After Sora’s spectacular debut, it was time for a smaller but equally useful improvement, one of what Sam Altman called “stocking stuffers”, or small Christmas presents. Meet Canvas, a feature that could transform how we work with ChatGPT.

Canvas was previously available in beta for Plus and Team plan users, but has now been rolled out to all ChatGPT plans. What new possibilities does it bring?

Text editing directly in the ChatGPT window

Canvas lets you edit selected fragments of text directly, without copying them into an external editor. You can format text in italics or bold, or add comments to specific fragments. Suggestions from comments can also be applied in real time with a single click. An ideal solution for authors and editors!

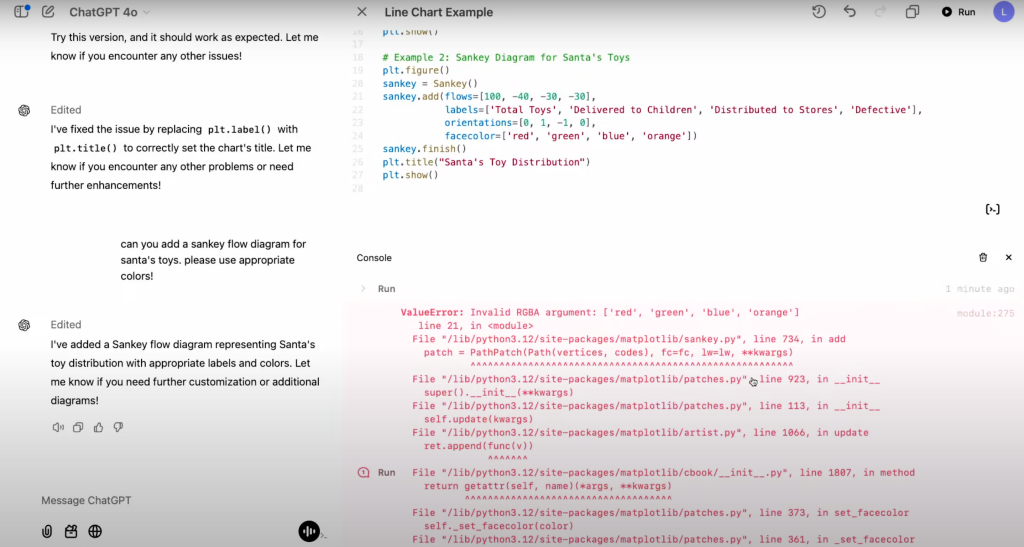

Built-in code editor

Canvas is also a nod to programmers. In the new editor, you can write, run, and fix code. The “Fix bug” feature corrects errors immediately, making Canvas a direct competitor to tools such as Cursor or Claude’s Artifacts.

Integration with custom GPTs

Canvas can now also be used in custom GPTs, making personalised assistants even more capable. Its ease of use and new capabilities make it a significant aid for both content creators and development teams.

Although Canvas might seem like a minor change, it noticeably improves the everyday experience of working with ChatGPT.

What Will the Coming Days Bring?

This is just the beginning of OpenAI’s holiday marathon. Over the remaining eight days, we can expect more innovations and improvements. We will keep updating this article with new information, so check back regularly.

Translation from Polish: Iga Trydulska